Our caching layer is performing well: high cache hit rates are significantly improving API response times. When data is served from cache instead of a database query or an upstream API call, response times drop from hundreds of milliseconds to single-digit milliseconds.

Understanding Cache Hit Rates

A cache “hit” occurs when requested data is found in the cache, so it can be returned immediately without accessing slower backend systems. The hit rate is the share of requests served this way. A high hit rate indicates an efficient caching strategy and predictable access patterns.
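
To make the definition concrete, here is a minimal sketch of how a hit rate can be measured. The `CountingCache` class, its `load` callback, and the airport codes are hypothetical names chosen for illustration; they are not part of our production caching layer.

```python
class CountingCache:
    """Dictionary-backed cache that counts hits and misses (illustrative only)."""

    def __init__(self):
        self._store = {}
        self.hits = 0
        self.misses = 0

    def get(self, key, load):
        """Return the cached value for key, calling load(key) on a miss."""
        if key in self._store:
            self.hits += 1
            return self._store[key]
        self.misses += 1
        value = load(key)  # stands in for the slower backend lookup
        self._store[key] = value
        return value

    @property
    def hit_rate(self):
        """Fraction of get() calls answered from the cache."""
        total = self.hits + self.misses
        return self.hits / total if total else 0.0


cache = CountingCache()
for code in ["KJFK", "KLAX", "KJFK", "KJFK"]:
    cache.get(code, lambda k: {"icao": k})

print(cache.hit_rate)  # 0.5: two misses (first lookups), then two hits
```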

Current Performance Metrics

- Cache hit rate: >85% of requests served from cache

- Average response time (cached): 8 ms

- Average response time (uncached): 340 ms

- Cache size: Optimized for frequently accessed aviation data
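
As a rough worked example, the metrics above can be combined into a blended average response time. This is a derived estimate, not a measured figure: it assumes the hit rate sits exactly at the 85% lower bound and that the two averages apply uniformly. The function name `blended_latency_ms` is hypothetical.

```python
def blended_latency_ms(hit_rate, hit_ms, miss_ms):
    """Weighted mean of cached and uncached response times."""
    return hit_rate * hit_ms + (1.0 - hit_rate) * miss_ms


# 0.85 * 8 ms + 0.15 * 340 ms
estimate = blended_latency_ms(0.85, 8.0, 340.0)
speedup = 340.0 / 8.0  # how much faster a hit is than a miss

print(f"{estimate:.1f} ms blended, {speedup:.1f}x faster on a hit")
# 57.8 ms blended, 42.5x faster on a hit
```

Because the reported hit rate is above 85%, the real blended average under these assumptions would be somewhat lower than this estimate. Most of the remaining latency comes from the small share of misses, which is why even modest hit-rate gains matter.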

Caching Strategy for Aviation Data

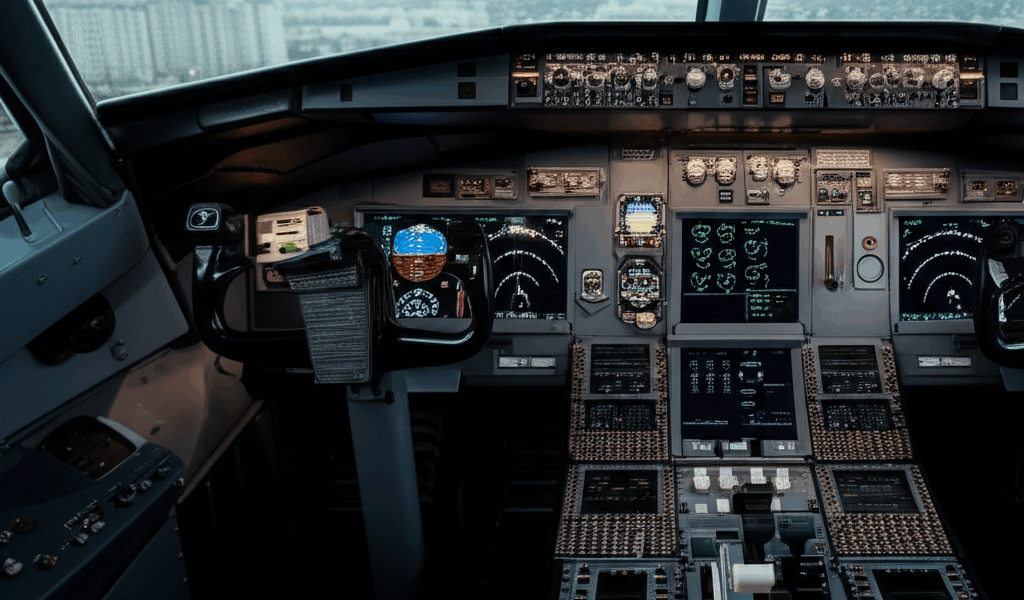

Aviation data presents unique caching challenges: some data (aircraft specifications, airport information) is relatively static, while other data (real-time positions, flight status) changes constantly.

Our Caching Approach

- Static data: Long TTL (Time To Live) for unchanging information like aircraft types and airport codes

- Semi-static data: Medium TTL for data that changes periodically, like flight schedules

- Real-time data: Short TTL or cache invalidation for rapidly changing position data

- Computed results: Caching expensive calculations and aggregations
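
The tiers above could be sketched as a small in-memory cache with a per-tier TTL. This is an illustration under stated assumptions, not our actual implementation: the `TTL_SECONDS` values, the tier names, and the `TTLCache` class are all hypothetical, since the concrete TTLs are not given here.

```python
import time

# Hypothetical TTLs in seconds; the real values are not stated in this post.
TTL_SECONDS = {
    "static": 24 * 60 * 60,  # aircraft types, airport codes
    "semi_static": 15 * 60,  # flight schedules
    "real_time": 5,          # position data
}


class TTLCache:
    """In-memory cache whose entries expire according to their tier."""

    def __init__(self, clock=time.monotonic):
        self._clock = clock   # injectable so expiry can be tested
        self._entries = {}    # key -> (expires_at, value)

    def set(self, key, value, tier):
        """Store value with the TTL of the given tier."""
        self._entries[key] = (self._clock() + TTL_SECONDS[tier], value)

    def get(self, key):
        """Return the value, or None if it is missing or expired."""
        entry = self._entries.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if self._clock() >= expires_at:
            del self._entries[key]  # drop the expired entry
            return None
        return value

    def invalidate(self, key):
        """Explicitly remove a key, e.g. when a new position arrives."""
        self._entries.pop(key, None)
```

A caller would pick the tier when writing, for example `cache.set("airport:KJFK", data, "static")`, and call `invalidate` when fresher real-time data is known to exist rather than waiting for the TTL to lapse.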

Performance Impact

Effective caching delivers multiple benefits:

- Reduced latency: Faster response times improve user experience

- Lower database load: Fewer queries reduce infrastructure costs

- Better scalability: The cache can absorb traffic spikes that would otherwise overwhelm the database

- Improved reliability: Cached data remains available even during backend issues
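
One common way to get the reliability benefit is to fall back to the last cached copy when the backend fails. The sketch below assumes that pattern for illustration; it is not confirmed as how our layer behaves. The function `fetch_with_stale_fallback`, the `last_known` dictionary, and the flight key are hypothetical.

```python
def fetch_with_stale_fallback(key, fetch, last_known):
    """Return (value, is_stale).

    Tries the backend first. If it raises, serves the last value seen
    for this key, flagged as stale. Re-raises when nothing is cached.
    """
    try:
        value = fetch(key)
    except Exception:
        if key in last_known:
            return last_known[key], True  # backend down, serve stale copy
        raise                             # nothing cached to fall back on
    last_known[key] = value               # remember the fresh value
    return value, False


def backend_up(key):
    return "on time"


def backend_down(key):
    raise ConnectionError("backend unavailable")


last_known = {}
print(fetch_with_stale_fallback("AA100", backend_up, last_known))
# ('on time', False)
print(fetch_with_stale_fallback("AA100", backend_down, last_known))
# ('on time', True)
```

Returning the staleness flag lets the caller decide whether old data is acceptable, which matters more for real-time positions than for airport codes.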

As our API usage grows, intelligent caching becomes increasingly critical for maintaining performance at scale. We continuously monitor cache metrics and adjust strategies to optimize the balance between freshness and performance.